There's probably nothing stopping the wave that is artificial intelligence. That makes it extra important to rein in the tech's alarming energy usage.

Fortunately, a research team at BitEnergy AI may have found a way to dramatically cut down AI's energy requirements, as Interesting Engineering reported.

The secret is a simplified algorithm called Linear-Complexity Multiplication, nicknamed L-Mul, according to the researchers. AI models today rely heavily on floating-point multiplications and dot products, which can be precise but also consume a lot of energy because of their complexity.

The researchers said, "Reducing the amount of computation needed by neural networks is the key to reduce both energy consumption and inference speed for large-scale AI models."

Linear-Complexity Multiplication can cut energy use by 95% for floating-point multiplications and by 80% for dot products, per the study. It does so by replacing those costly multiplications with simpler operations, chiefly additions, that use less energy.
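For a rough sense of how a multiplication can be swapped for additions, here is a minimal Python sketch. It is not BitEnergy AI's implementation: the function name `approx_mul`, the `offset` parameter, and its default value are assumptions made for illustration. The idea is that a floating-point number can be written as (1 + fraction) × 2^exponent, so a product can be approximated by adding the two fractions, adding the two exponents, and substituting a small constant for the one remaining multiplication.

```python
import math


def approx_mul(x: float, y: float, offset: float = 2.0 ** -4) -> float:
    """Approximate x * y using additions in place of the fraction multiply.

    Illustrative sketch only. `offset` is a hypothetical constant that
    stands in for the fraction-times-fraction term of the exact product.
    """
    if x == 0.0 or y == 0.0:
        return 0.0

    # The sign of the product is negative when exactly one input is negative.
    sign = -1.0 if (x < 0) != (y < 0) else 1.0

    # math.frexp gives m in [0.5, 1) with value = m * 2**e.
    # Rescale to the (1 + fraction) * 2**exponent form.
    mx, ex = math.frexp(abs(x))
    my, ey = math.frexp(abs(y))
    fx, fy = 2.0 * mx - 1.0, 2.0 * my - 1.0  # fractions in [0, 1)
    ex, ey = ex - 1, ey - 1

    # Exact:  (1 + fx) * (1 + fy) = 1 + fx + fy + fx * fy
    # Sketch: replace fx * fy with a constant, leaving only additions.
    mantissa = 1.0 + fx + fy + offset

    # Exponents are combined by addition; ldexp scales by a power of two.
    return sign * math.ldexp(mantissa, ex + ey)
```

With these assumed settings, `approx_mul(1.5, 1.5)` returns 2.0625, while the exact product is 2.25. The result is close but not identical, which is the trade-off such a scheme makes for cheaper arithmetic.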

The team at BitEnergy AI says that not only does their method save energy, it works faster while maintaining the same accuracy.

"Our experiments indicate that L-Mul outperforms 8-bit transformers with lower computational consumption and achieves lossless performance when applied to computation-intensive attention layers without additional training," they said.

BitEnergy AI's work in the area aligns with other efforts to address AI's energy usage directly and indirectly.

A team at the University of Minnesota Twin Cities is working on ways to make hardware more efficient, while University of Missouri researchers have targeted data centers' cooling systems as a potential avenue to save energy. The stakes are high: Goldman Sachs estimates AI will grow power demand for data centers by 160% by 2030.

Tech giants have grappled with that extra energy demand in a couple of different ways. Google is leveraging massive batteries that store clean energy to power a huge data center in Arizona. Microsoft recently took the bold step of agreeing to buy power from a unit of Pennsylvania's Three Mile Island nuclear plant that is set to be restarted.

No matter how companies power their AI, BitEnergy AI's potential solution to optimize the process is a promising development. It does face some obstacles to widespread adoption, though.

Right now, Linear-Complexity Multiplication requires specialized hardware that is not yet available. BitEnergy AI is working on that hardware as well as on integrating the method with AI systems, Interesting Engineering noted.

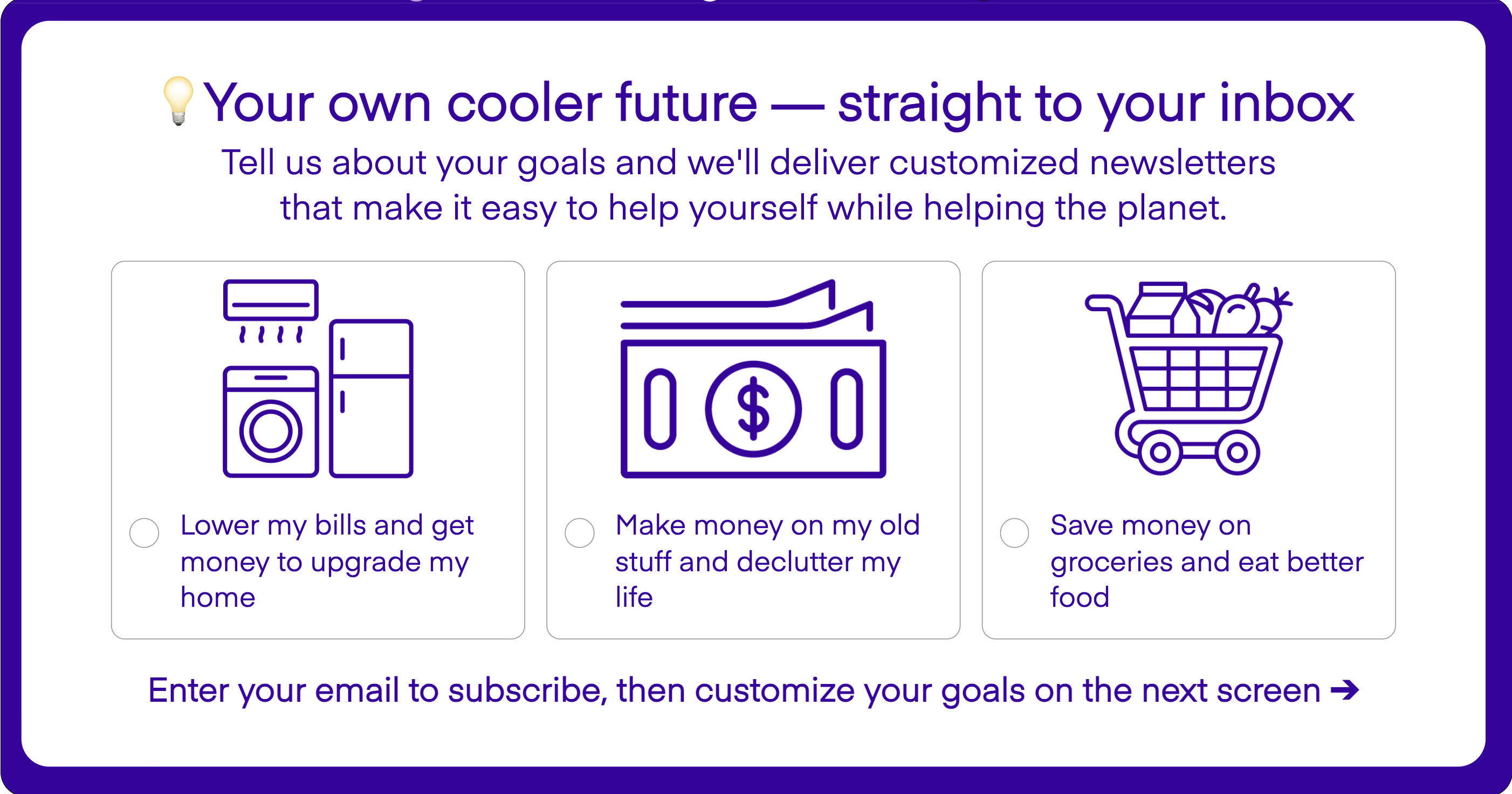
