Recently, a small Chinese startup called DeepSeek accomplished the seemingly impossible: It unveiled an AI model that purportedly rivals ChatGPT while using dramatically less computing power. What's more, the company says it was developed in a fraction of the time and at a fraction of the cost.

Wall Street responded by dumping energy stocks, betting on a future of lower AI energy demand, and climate activists hailed the development as a sign that efficiency gains would shrink AI's projected energy footprint.

But they're wrong.

Instead, as things stand, we're poised to look back on this moment as one of the biggest catalysts for increasing energy demand in the digital era. DeepSeek's breakthrough is actually bound to unleash an unprecedented surge in power consumption that will dwarf anything we've seen from the first wave of AI.

So, how worried should we be about AI's role in increasing pollution? Or could AI finally unlock a clean energy future? As a climate tech investor on the front lines of innovation, I see this moment as a critical inflection point for our future.

A staggering feat of cost destruction

DeepSeek's achievement is tremendous: While initial claims that it spent under $6 million on training have been largely debunked, the company still essentially matched ChatGPT's capabilities with significantly less funding and fewer chips by leveraging clever architecture choices and training techniques that squeeze more intelligence out of every calculation. Imagine building a car that runs on the energy of an e-bike, and costs as much to make.

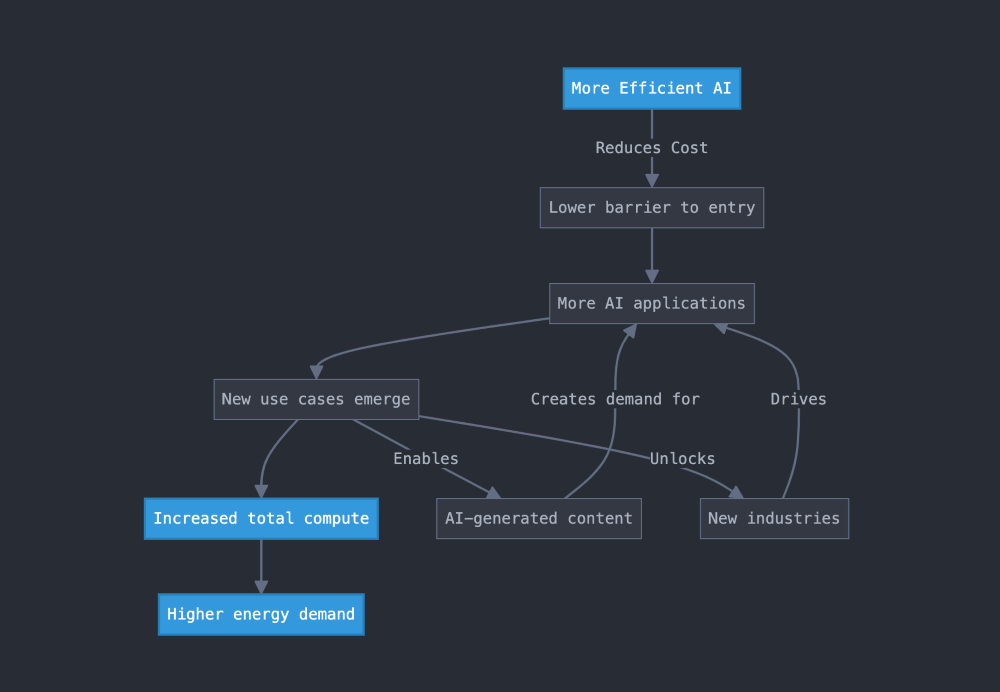

On the energy and climate side, it's understandable that many would assume that this will mean we'll use less energy. But this is a mistake. Cheaper, more efficient, more accessible AI will exponentially increase the use cases for AI, thereby also increasing demand for its inputs.

Having a much cheaper model won't change how you and I use ChatGPT, but for developers, this is a game-changer. As AI becomes more affordable, more businesses will likely shift from seeing it as an unnecessary expense to recognizing its value in daily operations.

The CEO of AI company Perplexity has said it could go from paying OpenAI $4.40 per million tokens — the basic units of text processing that determine AI operating costs — to paying DeepSeek just $0.10 per million tokens. This is almost a 98% reduction in cost and has big ramifications for the kinds of companies that will be built on top of models like these, as well as how many of them get built.
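To make that arithmetic concrete, here is a minimal sketch using only the two per-million-token prices quoted above. The function and variable names are illustrative, and the "same budget" multiplier assumes the quoted prices are directly comparable.

```python
# Per-million-token prices quoted in the article (USD).
OLD_PRICE = 4.40  # reported price paid to OpenAI
NEW_PRICE = 0.10  # reported price paid to DeepSeek


def percent_reduction(old: float, new: float) -> float:
    """Return the percentage drop in price from old to new."""
    return (old - new) / old * 100


# How much cheaper each token becomes.
reduction = percent_reduction(OLD_PRICE, NEW_PRICE)

# How many times more tokens the same budget buys at the new price.
multiplier = OLD_PRICE / NEW_PRICE

print(f"Cost reduction: {reduction:.1f}%")
print(f"Same budget buys about {multiplier:.0f}x as many tokens")
```

The second number is the one that matters for demand: a developer with an unchanged budget can afford roughly 44 times as much AI usage.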

For a robotics company, building and producing robots is expensive. If they can lower the cost of a key ingredient — i.e., artificial intelligence — it could make the whole product more affordable to produce and therefore easier to sell. Cheaper AI means more robots with AI.

Across the board, more efficient AI means more AI overall, not less. And exponentially so. That means more energy demand — so much more that it will dwarf any energy savings from using even dramatically more efficient models.

Let's talk about why.

When the coal industry became more efficient, more coal was burned

In 1865, economist William Stanley Jevons noticed something counterintuitive about coal-powered steam engines. When engineers made them more efficient, coal consumption went up, not down — which is now called the Jevons paradox. It turned out that increases in efficiency made steam power cheap enough for whole new industries to be born.
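The logic can be sketched in a few lines. Total energy use is the energy per unit of work multiplied by the amount of work demanded, so efficiency only lowers the total if usage grows by less than the efficiency gain. The function name and the numbers below are hypothetical, chosen only to show both outcomes.

```python
def net_energy_change(efficiency_gain: float, usage_growth: float) -> float:
    """Return the fractional change in total energy use.

    efficiency_gain: factor by which energy per unit of work falls
                     (10.0 means each task needs one tenth the energy).
    usage_growth:    factor by which the amount of work demanded rises.
    """
    return usage_growth / efficiency_gain - 1.0


# Hypothetical numbers, not measurements: the same 10x efficiency gain
# paired with two different demand responses.
modest_demand = net_energy_change(efficiency_gain=10.0, usage_growth=3.0)
surging_demand = net_energy_change(efficiency_gain=10.0, usage_growth=40.0)

print(f"Usage grows 3x:  total energy changes by {modest_demand:+.0%}")
print(f"Usage grows 40x: total energy changes by {surging_demand:+.0%}")
```

In the first case total energy falls by 70%; in the second it quadruples. The paradox is simply the second case: demand that grows faster than efficiency improves.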

Today, we're about to witness the Jevons paradox on an unprecedented scale with AI.

The Jevons paradox doesn't hold in every case. For example, technologies such as LEDs have decreased energy consumption in the lighting sector, even as they increase demand for lights everywhere, all around the world. But AI is the paradox's poster child.

It applies much more aptly to AI than it does to LEDs, hybrid cars, or any other physical product, and there are four key reasons why.

1. AI use cases are still in their infancy: AI is in its early stages, with massive untapped demand across industries and applications that we haven't even imagined yet. This is unlike lighting when LEDs were introduced, or cars when hybrids came around. An explosion in new AI use cases would drive higher net energy demand, even if individual AI computations became vastly more efficient.

2. AI is unbounded by physical constraints: Whereas LEDs have limited growth potential, AI does not. It can scale indefinitely with demand.

We have a natural, physical ceiling on how many lights we need and want. Even with some significant new use cases — including better TV screens — and new users, LEDs' scalability is limited to physical devices. AI, on the other hand, has no such constraints.

3. AI will produce more AI: AI also creates demand for itself in important ways. Cheaper, more accessible, and ubiquitous AI would lead to AI-generated content, AI assistants, and AI-generated code — in other words, AI would create AI-powered means of production that together generate more AI demand in a feedback loop.

As AI gets cheaper and more efficient, energy demand will almost certainly expand at a pace that outstrips even the most impressive efficiency gains.

4. More players and more games: DeepSeek's model is open-source, meaning it's publicly available, modifiable, and auditable. Rather than being limited to company-chosen use cases, it can be adapted to any use case that anyone out there can think of.

A new report from the International Energy Agency projects that electricity demand will rise around 4% annually through 2027, driven by AI and electrification. Over the next three years, the added electricity consumption is "the equivalent of Japan" each year. But that was before an open-source model blew the AI application layer wide open.
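As a rough sketch of what that projection compounds to, assuming a flat 4% rate applied in each of the three years (a simplification; the report's year-by-year figures may differ):

```python
ANNUAL_GROWTH = 0.04  # about 4% per year, the rate cited from the IEA report
YEARS = 3             # "the next three years" in the text above

# Compound the annual rate to get total growth over the whole period.
cumulative = (1 + ANNUAL_GROWTH) ** YEARS - 1

print(f"Cumulative growth over {YEARS} years: {cumulative:.1%}")
```

Under that assumption, demand ends the period roughly 12.5% above where it started, before accounting for any extra usage that cheaper AI unlocks.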

Could lower-energy AI help the clean energy transition?

Is there a version of all this wherein the energy efficiency benefits of DeepSeek buy time for renewables and nuclear power to ramp up just in time to meet the explosion of use cases?

It depends on three key factors: how fast new AI applications emerge; how fast we deploy clean energy, upgrade grids, and roll out today's budding innovations; and whether AI can drive energy breakthroughs. I see three possible scenarios:

Scenario 1: Clean energy catches up

In the first and best-case scenario, AI's energy demands scale gradually enough to give the renewable energy and nuclear industries a chance to adapt and grow.

In this scenario, we're deploying clean energy faster and faster while also making breakthroughs across every clean energy category, from perovskites that unlock game-changing efficiency gains in solar to next-gen nuclear fission.

Meanwhile, AI would still increase total power demand, but not faster than we can build the clean energy supply. We would achieve less pollution alongside really cool, incredible AI tools and services, rather than the runaway pollution you'd see in "The Terminator."

Scenario 2: Dirty fuels stage a comeback

In the second and worst-case scenario — the one I'm most concerned about — AI demand grows so fast that it overwhelms our clean energy resources and causes a dirty fuel resurgence and increased pollution.

If AI becomes 98% cheaper overnight, demand for AI services could surge faster than expected, pushing companies to fall back on the best-known, fastest way to build new energy: burning fossils.

In this scenario, AI not only accelerates emissions growth in the near term but could also lock in new dirty fuel infrastructure for decades to come. We get more pollution and maybe better AI-driven climate modeling to help us understand precisely how screwed we are.

Scenario 3: AI drives the energy revolution

In the third and most techno-utopian scenario, AI helps to solve the energy challenges that it creates — and then some.

AI is already being used to make our energy systems more efficient. It helps balance electricity usage on the grid, improves how energy is distributed, and speeds up research on big energy breakthroughs such as nuclear fusion.

While AI might cause some initial power grid strain and friction, in this scenario, over time, it could drive previously unimaginable advances in energy that far outweigh the cost to get there, and those improvements might be so dramatic that they lead to an energy-abundant future.

To make this scenario happen, though, we need to invest proactively in AI for clean energy, a good opportunity for climate investors. And while Nvidia's Jensen Huang has suggested this could happen, this scenario is a really big "if."

The AI acceleration has begun

Whether it's DeepSeek or OpenAI or Meta with another open-source model, it's clear that AI innovation is happening at a pace that's surprising even insiders.

If the future moves toward open-source models, which looks likely, then we'll see an even bigger and faster proliferation of developers, applications, and demand.

Regardless of which model, which company, or which country wins, AI at its core is an infinitely scalable machine that transforms megawatts into computational and geopolitical power. DeepSeek's breakthrough demonstration won't change what AI is or does — it will simply accelerate its usage.

This acceleration brings us to a crossroads of two revolutions: AI and clean energy. The decisions we make in the next 24 months will determine whether these forces amplify or undermine each other.

When I reflect on my own minuscule role in all of this, I'm both proud of the companies we've backed — including Swift Solar, Euclid Power, and Bedrock Energy, among others, which are all run by kind, dedicated, and brilliant clean energy entrepreneurs — and soothed by their very existence. That they are excellent and succeeding despite the gravitational pull that tugs on every startup gives me hope that smart humans with good intentions still have a shot to shape our trajectory.

We may be skeptical of techno-optimists, but right now we need them to be right so that we are ready to withstand any new major upticks in energy usage.

Susan Su is a partner at Toba Capital focused on backing climate tech entrepreneurs working to decarbonize our global economy and build a climate-positive future. She is the author of the newsletter "Climate Money" and host of the podcast Climate on the Edge. She is also an investor in The Cool Down.
