Writing a term paper, crafting a cover letter, pitching an idea — for better or worse, AI can help with that. But if you're going to cheat, at least make sure you delete the prompt from your final product.

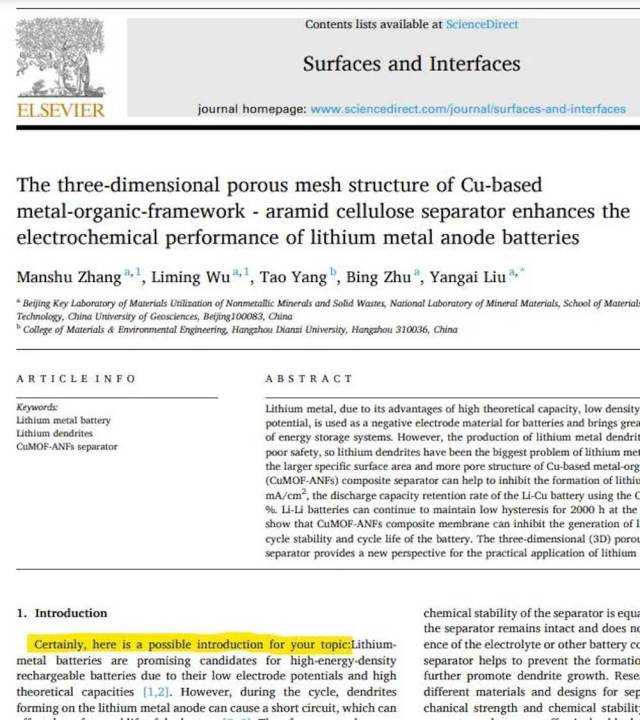

If artificial intelligence indeed is going to be the death of us all, this may be one of the first concrete examples that foreshadows the end. A peer-reviewed study published in Surfaces and Interfaces included an "obvious ChatGPT prompt reply," as a Redditor shared.

The problem was clear from the first words of the paper: "Certainly, here is a possible introduction for your topic."

The journal later retracted the work, but not without controversy.

"There are concerns that the authors appear to have used a Generative AI source in the writing process of the paper without disclosure, which is a breach of journal policy," it stated. "The journal sincerely regrets that these issues were not detected during the manuscript screening and evaluation process and apologies are offered to readers of the journal."

Most importantly, perhaps, the episode raises questions about just what we're doing with AI. The issues were not detected because the paper was not screened or evaluated; that much is painfully evident. But the authors must be held to some professional standard, too. Passing off work that is not your own has long been grounds for expulsion, termination, and humiliation.

Many schools and publications now run AI detection alongside their plagiarism scans, but those scans are not foolproof. The most feared outcomes of AI use seem to be well beyond the horizon, but they may be closer than they appear if so many people have already let go of the wheel.

The dumbing down of academic study is no small worry. If institutions of higher learning deem it acceptable to turn over their rigorous pursuits to robots, the masses are sure to follow.

Such a brain drain will be accompanied by the depletion of natural resources, which are required in massive amounts to power data centers and supercomputers, without which AI could not function.

Google and other companies are siphoning valuable water and emitting heat-trapping gases into the atmosphere to offer these questionably valuable services to the world. AI is also prone to producing misinformation, without the commonsense checks and balances that come from human-generated knowledge.

"I was hoping the future would be more 'we have cool flying cars' Blade Runner and less 'people are trained in the process of spotting a robot pretending to be a human' Blade Runner," one commenter wrote.

"How did the reviewers or publishers not catch this?!" another user wondered, while someone else said: "It's problematic on so many levels — these are people ultimately entrusted to be experts. Everyone faking everything lol how would we know?"
